Bots and website scrapers can be a serious annoyance to anyone who administers a website, and the more sites and servers you are responsible for, the bigger the problem becomes. Every request a bot or scraper makes consumes some amount of resources on the web server hosting the site; the more requests that are made, the more resources are needed to process them, leaving fewer resources for actual visitors. This raises the question of how legitimate or desired this traffic is and what you can do about it.

Detecting this type of activity

The main place to look to detect this type of activity is the web server logs. If you have something like Splunk or ELK, this part is a breeze. If not, Log Parser can help narrow things down quickly when run against the IIS logs. Bots and scrapers tend to generate large numbers of 404 errors, so looking for multiple 404s from a single IP will generally yield some valuable information. Another thing to look for is IP addresses that have made an unusually large number of requests against the servers, which would indicate possible indexing or scraping.
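If neither tool is at hand, the same two checks can be roughed out in a few lines of Python. This is only a sketch: it assumes the logs are in the W3C extended format with a `#Fields:` header and that `c-ip` and `sc-status` are being logged. The function name `summarize_iis_log` and the sample entries (which use documentation IP ranges) are made up for illustration.

```python
from collections import Counter

def summarize_iis_log(lines):
    """Count total requests and 404 responses per client IP."""
    fields = []
    requests = Counter()
    not_found = Counter()
    for line in lines:
        line = line.strip()
        if not line:
            continue
        if line.startswith("#Fields:"):
            # The header names the columns for the entries that follow.
            fields = line.split()[1:]
            continue
        if line.startswith("#") or not fields:
            continue
        row = dict(zip(fields, line.split()))
        ip = row.get("c-ip")
        if ip is None:
            continue
        requests[ip] += 1
        if row.get("sc-status") == "404":
            not_found[ip] += 1
    return requests, not_found

# Hypothetical sample data, not taken from a real server.
SAMPLE_LOG = """\
#Software: Microsoft Internet Information Services 8.5
#Fields: date time cs-method cs-uri-stem c-ip cs(User-Agent) sc-status
2015-06-01 10:00:01 GET /index.html 198.51.100.20 Mozilla/5.0+(Windows+NT+6.3) 200
2015-06-01 10:00:02 GET /admin.php 203.0.113.7 ExampleBot/1.0 404
2015-06-01 10:00:02 GET /wp-login.php 203.0.113.7 ExampleBot/1.0 404
2015-06-01 10:00:03 GET /backup.zip 203.0.113.7 ExampleBot/1.0 404
2015-06-01 10:00:04 GET /about.html 203.0.113.7 ExampleBot/1.0 200
""".splitlines()

requests, not_found = summarize_iis_log(SAMPLE_LOG)
print("Most requests:", requests.most_common(3))
print("Most 404s:", not_found.most_common(3))
```

What counts as "unusually large" depends on the site, so the thresholds are left to the reader.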

The User-Agent associated with the request will usually contain some useful info about the requester, so make sure you are logging this field. Bots that identify themselves will typically use something like “Mozilla/5.0+(compatible;+Googlebot/2.1;++http://www.google.com/bot.html)” for the User-Agent (IIS logs write spaces as +). Searching ELK for *bot* and then applying various fields to the search can also narrow the results down quickly and reveal valuable information.
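Without ELK, a rough equivalent of the *bot* search can be run over the logged User-Agent values. The sketch below assumes the values come from the `cs(User-Agent)` field of the IIS logs, where spaces are written as `+`. Turning every `+` back into a space is lossy, because a literal `+` in the original header cannot be told apart. The function name `bot_like_agents` is hypothetical.

```python
from collections import Counter

def bot_like_agents(logged_agents, keyword="bot"):
    """Tally logged User-Agent values that contain the keyword (case-insensitive)."""
    counts = Counter()
    for raw in logged_agents:
        readable = raw.replace("+", " ")  # lossy: literal '+' also becomes a space
        if keyword.lower() in readable.lower():
            counts[readable] += 1
    return counts

# Hypothetical list of values pulled from the cs(User-Agent) column.
AGENTS = [
    "Mozilla/5.0+(compatible;+Googlebot/2.1;++http://www.google.com/bot.html)",
    "Mozilla/5.0+(compatible;+Googlebot/2.1;++http://www.google.com/bot.html)",
    "Mozilla/5.0+(Windows+NT+6.3)+Firefox/38.0",
]

for agent, hits in bot_like_agents(AGENTS).most_common():
    print(hits, agent)
```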

Robots.txt and its limitations

The robots.txt file placed at the root of each site can help prevent search engine bots that respect the boundaries defined within the file from indexing and crawling the site. For example, the following robots.txt would allow Googlebot to index the entire site but not any other bots:

    User-agent: Googlebot
    Disallow:

    User-agent: *
    Disallow: /

The downside to using robots.txt is that we can only ask the bot to respect the boundaries; nothing forces it to comply. The file is also viewable by anyone, and it lists not only who you don’t want scanning the site but also the directories that are forbidden, which to an attacker would usually be places of interest.
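To see how a compliant crawler would read the example rules, they can be fed to Python's standard `urllib.robotparser`. This is only a sketch of what a well-behaved client does; nothing here is enforced by the server. The agent name `ExampleScraper` and the path `/products/` are made up for illustration.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: Googlebot
Disallow:

User-agent: *
Disallow: /
"""

def build_parser(robots_txt):
    """Parse robots.txt content from a string instead of fetching it."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser

parser = build_parser(ROBOTS_TXT)
for agent in ("Googlebot", "ExampleScraper"):
    # An empty Disallow permits everything; "Disallow: /" forbids everything.
    print(agent, "may fetch /products/:", parser.can_fetch(agent, "/products/"))
```

A scraper that never consults the file is unaffected, which is why the next section moves the decision to the server.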

Blocking the requests with IIS and URL Rewrite

One way to mitigate or block this type of activity on IIS 7+ is to use URL Rewrite to inspect the incoming request for a signature and then do something with the request, such as redirect it, show an error or even just abort it. In this case, the User-Agent can be used for the signature since it is typically present and unique enough to not accidentally flag legitimate traffic. If you are using ARR in front of the web servers as a load balancer, you can apply this rule there instead.

- Download and install the URL Rewrite module if you have not already.

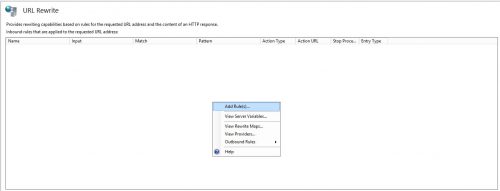

- Open IIS Manager and select either the server level or site level, then double-click the URL Rewrite module in the center pane.

- Right-click and select Add Rule(s)…

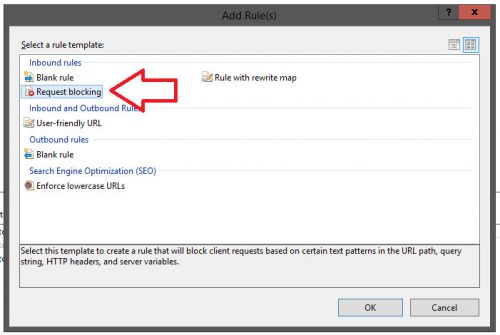

- Select Request Blocking and then click OK.

- On the Add Request Blocking Rule, select the following:

Block access based on: User-agent Header

Block request that: Matches the Pattern

Pattern (User-agent Header): *Googlebot*

Using: Wildcards

How to block: Abort Request

Click OK when complete.

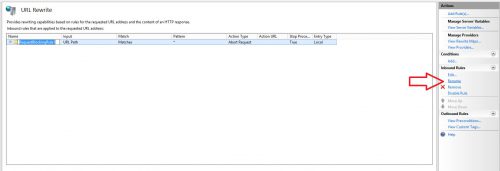

- Click on the rule and then click Rename on the right-hand side. Give the rule an appropriate name for future reference.

This rule will block any requests that contain the string Googlebot anywhere in the User-Agent by aborting the request with no error or status code. You can put any user agent or a portion of a user agent that you want to block in the Pattern field. The * before and after the string means match anything before or after the string. So *Googlebot* would match if the User-Agent was “Mozilla/5.0+(compatible;+Googlebot/2.1;++http://www.google.com/bot.html)”.
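The wildcard behaviour described above can be mimicked in a few lines of Python to try patterns before putting them in a rule. This is a sketch of the idea, not the URL Rewrite module's implementation: the function name `wildcard_match` is hypothetical, and the `ignore_case` default is an assumption.

```python
import re

def wildcard_match(pattern, value, ignore_case=True):
    """Return True if value matches pattern, where * stands for any run of characters."""
    # Escape each literal chunk so characters like '.' or '(' are taken literally.
    regex = ".*".join(re.escape(chunk) for chunk in pattern.split("*"))
    flags = re.DOTALL | (re.IGNORECASE if ignore_case else 0)
    return re.fullmatch(regex, value, flags) is not None

GOOGLEBOT_UA = "Mozilla/5.0+(compatible;+Googlebot/2.1;++http://www.google.com/bot.html)"
BROWSER_UA = "Mozilla/5.0 (Windows NT 6.3) Firefox/38.0"  # made-up browser string

print(wildcard_match("*Googlebot*", GOOGLEBOT_UA))  # True
print(wildcard_match("Googlebot", GOOGLEBOT_UA))    # False: no * means the whole string must match
print(wildcard_match("*Googlebot*", BROWSER_UA))    # False
```

The second call shows why the leading and trailing * matter: without them the pattern has to equal the entire User-Agent.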

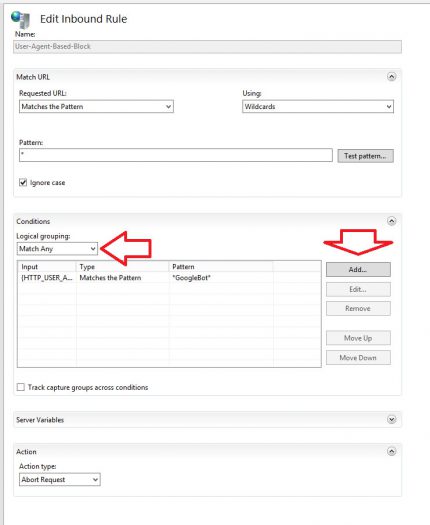

Now let’s customize this rule and make it a bit more versatile by having it check for multiple agents.

- Select the rule and on the right hand side click Edit.

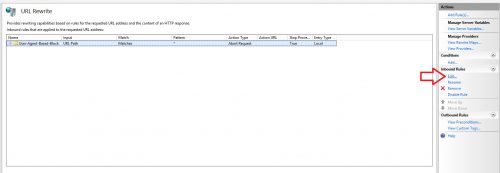

- Under Conditions change the Logical grouping to “Match Any” and click Add…

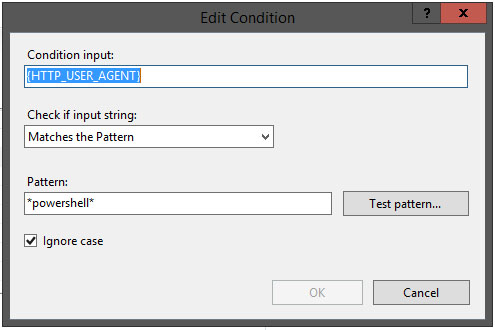

- Enter the following settings on the Add Condition window:

Condition input: {HTTP_USER_AGENT}

Check if input string: Matches the Pattern

Pattern: *powershell*

Ignore case: Checked

Click OK when complete.

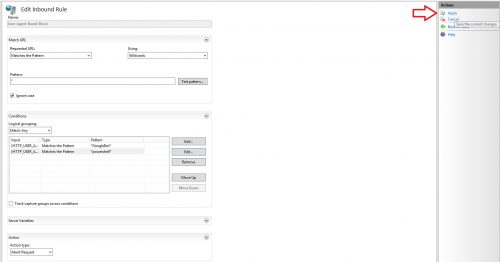

- There should now be multiple entries under Conditions. Click Apply to save the settings.

This time I used *powershell* for the pattern to test with. When the PowerShell cmdlet Invoke-WebRequest is used against a site, it should send something similar to “Mozilla/5.0+(Windows+NT;+Windows+NT+6.3;+en-US)+WindowsPowerShell/4.0” for the User-Agent. This should match the pattern set in our rule, since with Ignore case checked the string “WindowsPowerShell” contains powershell.
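Why the grouping has to be changed to “Match Any” can be illustrated with a small model of the rule. This is a sketch under assumptions, not how the module is implemented: `Condition` and `should_abort` are hypothetical names, and Python's `fnmatchcase` is used as a stand-in for wildcard matching (it also gives `?` and `[...]` special meaning, which the patterns here do not use).

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase

@dataclass
class Condition:
    pattern: str
    ignore_case: bool = True

    def matches(self, user_agent):
        if self.ignore_case:
            return fnmatchcase(user_agent.lower(), self.pattern.lower())
        return fnmatchcase(user_agent, self.pattern)

def should_abort(user_agent, conditions, grouping="MatchAny"):
    """MatchAny aborts if one condition matches; MatchAll needs every condition to match."""
    results = [c.matches(user_agent) for c in conditions]
    return any(results) if grouping == "MatchAny" else all(results)

CONDITIONS = [Condition("*Googlebot*"), Condition("*powershell*")]
POWERSHELL_UA = "Mozilla/5.0+(Windows+NT;+Windows+NT+6.3;+en-US)+WindowsPowerShell/4.0"

print(should_abort(POWERSHELL_UA, CONDITIONS, "MatchAny"))  # True
print(should_abort(POWERSHELL_UA, CONDITIONS, "MatchAll"))  # False
```

With “Match All”, a request would have to contain both Googlebot and powershell in its User-Agent, so neither client would be blocked on its own.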

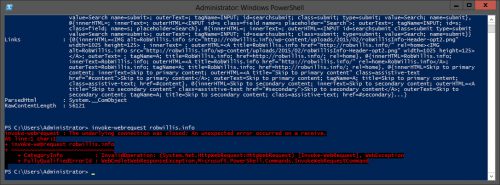

Testing with the rule disabled just to see how it looks when the request is not blocked:

And now with the URL Rewrite rule enabled:

We can see that a match was made and the request was aborted as expected.
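The same test can be repeated from any machine with Python by sending a request with a chosen User-Agent and seeing whether a response comes back. This is a minimal sketch using only the standard library: the function name `probe` is hypothetical, and it assumes an aborted request shows up on the client as a dropped connection with no status code.

```python
import http.client
import urllib.error
import urllib.request

def probe(url, user_agent, timeout=10):
    """Request url with the given User-Agent and describe what came back."""
    request = urllib.request.Request(url, headers={"User-Agent": user_agent})
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return f"HTTP {response.status}"
    except urllib.error.HTTPError as exc:
        # The server answered, but with an error status such as 403 or 404.
        return f"HTTP {exc.code}"
    except (OSError, http.client.HTTPException) as exc:
        # Connection reset, closed without a response, or timed out.
        return f"no response ({type(exc).__name__})"
```

Calling `probe` with your own site's URL and the WindowsPowerShell User-Agent shown earlier should return a "no response" result while the rule is enabled and an HTTP status while it is disabled.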

Summary

This approach can be very effective, and if you are using IIS Shared Configuration it also scales well, since all of this information is stored in the main IIS configuration files when applied at the server level. The rules themselves are very flexible and easy to maintain.