In Pt. 3 of my series on setting up ELK 5 on Ubuntu 16.04, I showed how easy it was to ship IIS logs from a Windows Server 2012 R2 machine using Filebeat. One thing you may have noticed with that configuration is that the logs aren’t parsed by Logstash: each line from the IIS log ends up as one large string stored in the generic message field. This is less than ideal, because it makes it considerably more work to sort and search the data for useful information.

The easiest way to parse the logs is to create a Grok filter that detects the document type (iis) set in the Filebeat configuration and then matches each field from the IIS log. Once you have a filter, you can place it on the Logstash server in the /etc/logstash/conf.d/ directory created in the previous post. Matching all of the fields can be a tedious process, but it is well worth it once you get it right.

You can find more information about Grok here:

https://www.elastic.co/guide/en/logstash/current/plugins-filters-grok.html

If you are writing your own Grok filters, I highly recommend the following site for testing filters:

http://grokconstructor.appspot.com/do/match

The IIS Grok filter that I created and used in the video can be downloaded here:

11-IIS-Filter.zip

IIS Server Configuration

There are a few configuration changes that need to be made on the IIS server to make sure the IIS log fields match up with the Grok filter used here; otherwise Grok will fail to parse the log. The Filebeat configuration will also need to be updated to set the document_type (not to be confused with input_type), so that logs are flagged as iis when they are ingested and the Grok filter can use that for its type match.

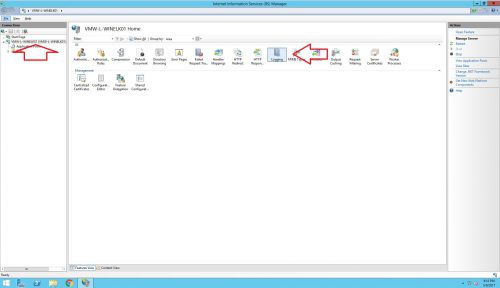

1.) Open IIS Manager, click on the server level on the left-hand side, and then click on Logging in the center pane.

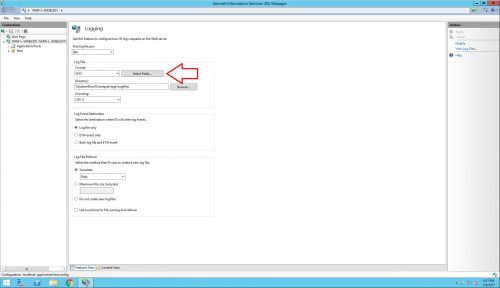

2.) Under the Log File section where it says Format:, leave the default W3C and click the Select Fields button.

3.) Make sure that ALL of the fields are selected. This part is important since the Grok filter I made is expecting to be matched up with all of these fields. If you want to remove specific fields, they will also need to be removed from the Grok filter.

4.) Open the filebeat.yml configuration file located in the root of the Filebeat installation directory (in my case, C:\ELK-Beats\filebeat-5.x.x\) and add document_type: iis to the config so it looks similar to the following:

    filebeat.prospectors:
    # Each - is a prospector. Most options can be set at the prospector level, so
    # you can use different prospectors for various configurations.
    # Below are the prospector specific configurations.
    - input_type: log
      paths:
        - C:\inetpub\logs\LogFiles\*\*
      document_type: iis

You can find more information on the filebeat configuration options here:

https://www.elastic.co/guide/en/beats/filebeat/current/configuration-filebeat-options.html

5.) Save the file and restart the Filebeat service.
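To double-check step 3, it can help to compare the #Fields: header that IIS writes at the top of each log file with the order the Grok filter in this post expects. The Python sketch below is only an illustration. The EXPECTED_FIELDS list is an assumption (the W3C field names as IIS usually writes them, arranged in the order of the filter), and the function names are hypothetical.

```python
# Field names as they usually appear in an IIS W3C "#Fields:" header when every
# field is selected (an assumption), in the order the Grok filter expects.
EXPECTED_FIELDS = [
    "date", "time", "s-sitename", "s-computername", "s-ip", "cs-method",
    "cs-uri-stem", "cs-uri-query", "s-port", "cs-username", "c-ip",
    "cs-version", "cs(User-Agent)", "cs(Cookie)", "cs(Referer)", "cs-host",
    "sc-status", "sc-substatus", "sc-win32-status", "sc-bytes", "cs-bytes",
    "time-taken",
]


def fields_from_header(line):
    """Return the field names from a '#Fields:' line, or None for any other line."""
    prefix = "#Fields:"
    if not line.startswith(prefix):
        return None
    return line[len(prefix):].split()


def header_matches_filter(line):
    """True only if the header lists exactly the expected fields, in order."""
    return fields_from_header(line) == EXPECTED_FIELDS


if __name__ == "__main__":
    # Hypothetical headers: one with every field, one with only a few selected.
    full_header = "#Fields: " + " ".join(EXPECTED_FIELDS)
    short_header = "#Fields: date time cs-method cs-uri-stem"
    print(header_matches_filter(full_header))
    print(header_matches_filter(short_header))
```

If the header in a real log file differs from this list, either the field selection in IIS or the Grok filter would need to change so that the two agree.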

ELK Server Configuration

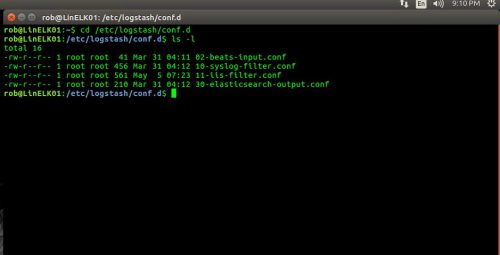

In Pt. 2 of my ELK 5 series, I created a few Logstash filters on the Linux machine in /etc/logstash/conf.d, and that is also where the new Grok filter is going to go.

If you would like to download the IIS Grok filter I made rather than create it manually, you can find it here:

11-IIS-Filter.zip

1.) To manually create the Grok Filter on the ELK/Linux machine, run the following command to create and open a file named 11-iis-log-filter.conf in the /etc/logstash/conf.d directory:

    sudo nano /etc/logstash/conf.d/11-iis-log-filter.conf

2.) Copy and paste the following configuration into the file:

    filter {
      if [type] == "iis" {
        grok {
          match => { "message" => "%{TIMESTAMP_ISO8601:log_timestamp} %{WORD:S-SiteName} %{NOTSPACE:S-ComputerName} %{IPORHOST:S-IP} %{WORD:CS-Method} %{URIPATH:CS-URI-Stem} (?:-|\"%{URIPATH:CS-URI-Query}\") %{NUMBER:S-Port} %{NOTSPACE:CS-Username} %{IPORHOST:C-IP} %{NOTSPACE:CS-Version} %{NOTSPACE:CS-UserAgent} %{NOTSPACE:CS-Cookie} %{NOTSPACE:CS-Referer} %{NOTSPACE:CS-Host} %{NUMBER:SC-Status} %{NUMBER:SC-SubStatus} %{NUMBER:SC-Win32-Status} %{NUMBER:SC-Bytes} %{NUMBER:CS-Bytes} %{NUMBER:Time-Taken}"}
        }
      }
    }

Note that the match line should remain one long string; don’t break it up into multiple lines.

3.) Save the file and exit nano.

4.) Restart Logstash:

    sudo systemctl restart logstash
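To get a feel for what the filter from step 2 does to a single log line, the Python sketch below approximates the Grok pattern with a plain regular expression. It is not Grok itself: the sub-patterns are simplified stand-ins for WORD, NOTSPACE, NUMBER and so on, the group names use underscores because Python group names cannot contain hyphens, and the sample log line is made up for illustration.

```python
import re

# Simplified stand-ins for the Grok patterns used in the filter. These are
# assumptions for illustration, not the real Grok definitions.
WORD = r"\w+"
NOTSPACE = r"\S+"
NUMBER = r"\d+"
URIPATH = r"/\S*"

# One (name, pattern) pair per captured field, in the same order as the filter.
FIELDS = [
    ("log_timestamp", r"\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}"),
    ("s_sitename", WORD),
    ("s_computername", NOTSPACE),
    ("s_ip", NOTSPACE),          # IPORHOST in the filter
    ("cs_method", WORD),
    ("cs_uri_stem", URIPATH),
    ("cs_uri_query", NOTSPACE),  # simplified; the filter uses a stricter group
    ("s_port", NUMBER),
    ("cs_username", NOTSPACE),
    ("c_ip", NOTSPACE),          # IPORHOST in the filter
    ("cs_version", NOTSPACE),
    ("cs_useragent", NOTSPACE),
    ("cs_cookie", NOTSPACE),
    ("cs_referer", NOTSPACE),
    ("cs_host", NOTSPACE),
    ("sc_status", NUMBER),
    ("sc_substatus", NUMBER),
    ("sc_win32_status", NUMBER),
    ("sc_bytes", NUMBER),
    ("cs_bytes", NUMBER),
    ("time_taken", NUMBER),
]

# Fields are separated by single spaces, as in the match string.
LINE_RE = re.compile(" ".join(f"(?P<{name}>{pattern})" for name, pattern in FIELDS))


def parse_iis_line(line):
    """Return a dict of named fields, or None if the line does not match."""
    match = LINE_RE.fullmatch(line.strip())
    return match.groupdict() if match else None


# Hypothetical log line with every W3C field present.
SAMPLE = (
    "2017-01-15 10:22:31 W3SVC1 WEB01 192.168.1.10 GET /default.htm - 80 - "
    "192.168.1.50 HTTP/1.1 Mozilla/5.0+(Windows+NT+10.0) - - www.example.com "
    "200 0 0 1450 320 15"
)

if __name__ == "__main__":
    for name, value in parse_iis_line(SAMPLE).items():
        print(f"{name}: {value}")
```

A line that returns None here would likely end up with a parse failure in Logstash as well, although the real Grok patterns are stricter in places, so a match in this sketch is not a guarantee.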

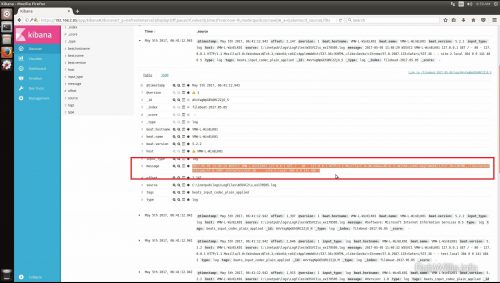

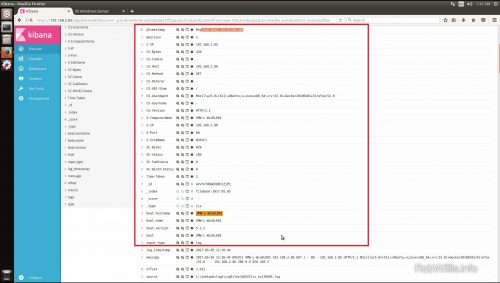

Verifying the Filter is Working

Log in to Kibana, filter the search down to the last 15 minutes, and add the host that is sending the IIS logs. Only new logs will be parsed by the filter, so if the web server is not busy you may want to browse the site to generate some fresh logs.

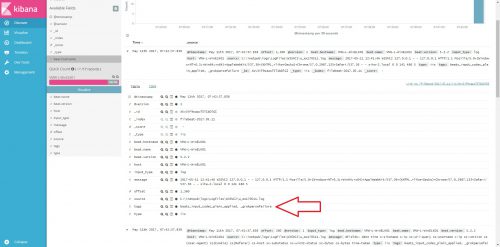

As you can see in the screenshot, the fields are all parsed out as expected and can now easily be added to searches. If the filter doesn’t match up with the log, you should see _grokparsefailure displayed in the tags field.

If this happens, the easiest thing to do is take a few entries from the IIS logs and run them through the filter with a Grok tester like the one found here:

http://grokconstructor.appspot.com/do/match
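A quick local check can also narrow things down before pasting lines into a tester. IIS W3C logs begin with directive lines that start with #, and those lines do not match the filter's pattern. The Python sketch below, with hypothetical names and made-up sample lines, assumes the 22 space-separated values the filter in this post expects (date, time, and the 20 fields after them) and flags anything else.

```python
# date + time + the 20 fields that follow them in the Grok filter (assumed layout).
EXPECTED_TOKENS = 22


def classify(line):
    """Label a raw IIS log line as 'empty', 'header', 'ok', or a count mismatch."""
    line = line.strip()
    if not line:
        return "empty"
    if line.startswith("#"):
        # Directive lines such as "#Fields:" are not log entries.
        return "header"
    count = len(line.split())
    if count == EXPECTED_TOKENS:
        return "ok"
    return f"unexpected field count: {count}"


if __name__ == "__main__":
    # Hypothetical lines: a directive, a full entry, and a truncated entry.
    samples = [
        "#Version: 1.0",
        "2017-01-15 10:22:31 W3SVC1 WEB01 192.168.1.10 GET /default.htm - 80 - "
        "192.168.1.50 HTTP/1.1 Mozilla/5.0 - - www.example.com 200 0 0 1450 320 15",
        "2017-01-15 10:22:31 GET /default.htm - 80 - 192.168.1.50 200 0 0 15",
    ]
    for sample in samples:
        print(classify(sample))
```

A count mismatch usually points back to the field selection in IIS Manager, while an "ok" line that still fails in Logstash suggests that one of the individual patterns in the filter does not fit the value in that position.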

And that is where I am going to end this post. There is some additional information covered in the video so be sure to check that out if you haven’t already!