Intro

Recently, I have been spending a lot of time researching and working with PowerShell logging. Since PowerShell is readily available (built into the OS) and has an assortment of functionality that can be used across the entire kill chain right out of the box, it is an ideal candidate for virtually any type of attack scenario. For a blue teamer, these logs can be an invaluable resource, providing a level of visibility that is not available in any EDR (Endpoint Detection and Response) solution that I have seen. For a red teamer, knowing how PowerShell-based tools look once they are logged can be extremely useful when attempting to avoid detection.

Unfortunately, when it comes to getting started with PowerShell logging, there often seems to be some confusion and uncertainty around what logs are available, where the logs are, how to enable the additional logging, what exactly gets logged, and how it will all look from an end-user perspective. That is why I am writing this post: to put all of my recent research into a single place with plenty of visual examples, while also covering some of the nuances and issues that will come up along the way.

If you would like more information on PowerShell-based tools and attacks, the MITRE technique page for PowerShell is packed with information:

MITRE ATT&CK Technique T1086 – PowerShell

What to monitor and where?

One of the biggest things to recognize about PowerShell is that it is basically an entire toolkit available to everyone, from non-admin users on Windows workstations all the way up to Windows servers where privileged accounts are often used. This makes PowerShell extremely attractive to leverage from the very beginning of a penetration test or attack scenario, even with a low-privilege account.

The point I am trying to make here is that it is just as critical to monitor workstations as it is servers. The downside to this is that there are often considerably more workstations than servers in a given environment, and when ingesting all of this data into a central location or SIEM (Security Information and Event Management), volume will quickly become a primary concern.

With all of this in mind, one of the goals for this post is to target the most valuable data from these logs, while attempting to keep the overall volume as reasonable as possible.

What logs are available?

The following command lists the PowerShell logs that are available in the Event Viewer:

PS> Get-WinEvent -ListLog *PowerShell*

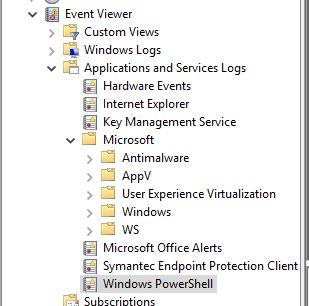

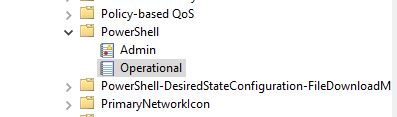

These are the main PowerShell logs to monitor:

- Windows PowerShell

- Microsoft-Windows-PowerShell/Operational

The Windows PowerShell log can be a mixed bag. What gets logged, and how, varies greatly and also seems to fluctuate depending on the version of PowerShell in use. Not to mention, it can be very high in volume. Instead, I would suggest focusing on the Microsoft-Windows-PowerShell/Operational log, since it tends to be the highest in value and the lowest in volume. Narrowing things down a little more, there are two event IDs to focus on in this log: Module (4103) and Script block (4104).

There are also the PowerShell Transcription logs, which are not saved to the event log but instead as flat text files. I will talk about these in more detail in just a bit, but for now I want to mention that the default location for these logs is C:\Users\%USERNAME%\Documents\. These logs are saved with a unique name and timestamp to prevent collisions.

Sounds great, but what does this all look like?

Let’s take the following sample script and see how it looks as it runs through the PowerShell pipeline and how it gets logged:


Script block Logs – Event ID 4104

- Script block logs show all of the commands and/or source code for any PowerShell run on the system, along with the user who ran it and the path to the script. The logged text is identical to the sample script source and even includes the comments. Longer scripts are broken up into multiple events, each holding a numbered chunk of the script block. Think of this log as having all of the details of what ran on the system.
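A long script block is spread over several 4104 events, so it often has to be stitched back together before it can be read as a whole. The Python sketch below does that for events that have already been exported off the box. It assumes each event has been turned into a dictionary keyed by the 4104 event data field names (ScriptBlockId, MessageNumber, MessageTotal, ScriptBlockText). That export format is an assumption, not something Windows produces directly.

```python
from collections import defaultdict


def reassemble_script_blocks(events):
    """Rebuild full script blocks from 4104 events split into numbered chunks.

    Each event is assumed to be a dict with the 4104 event data fields
    ScriptBlockId, MessageNumber, MessageTotal and ScriptBlockText
    (a hypothetical export shape). Returns {ScriptBlockId: (text, complete)},
    where complete is False if some chunks are missing from the input.
    """
    chunks = defaultdict(dict)
    totals = {}
    for event in events:
        block_id = event["ScriptBlockId"]
        # Keying on the chunk number also drops duplicate copies of a chunk.
        chunks[block_id][int(event["MessageNumber"])] = event["ScriptBlockText"]
        totals[block_id] = int(event["MessageTotal"])

    result = {}
    for block_id, parts in chunks.items():
        # Chunks are numbered 1..MessageTotal; join them back in order.
        text = "".join(parts[number] for number in sorted(parts))
        result[block_id] = (text, len(parts) == totals[block_id])
    return result
```

Events can arrive out of order, and a block can be incomplete if the retention window cut part of it off. The `complete` flag makes the second case visible instead of silently returning partial code.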


Module Logs – Event ID 4103

- Module logs show how PowerShell commands/code are executed, capturing tons of useful information, like variable initialization, that is not captured by any of the other logs. Module log data is logged as each command is processed, creating an individual event for each one. In this example, the given script generated 20 separate 4103 events, each containing the portion of the script that was expanded as it moved through the pipeline. This behavior is important to keep in mind, as it can lead to bursts in log volume depending on what is running at a given time. This type of logging has been available since PowerShell 3.0. Think of this log as having the details of how PowerShell executed on the system.
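To get a feel for those bursts in exported data, the 4103 events can be bucketed by minute. This is a minimal Python sketch. It assumes the event timestamps were exported as ISO 8601 strings that `datetime.fromisoformat` can parse. The threshold is a hypothetical value you would tune against your own baseline.

```python
from collections import Counter
from datetime import datetime


def events_per_minute(timestamps):
    """Count events in one-minute buckets from ISO 8601 timestamp strings."""
    counts = Counter()
    for ts in timestamps:
        moment = datetime.fromisoformat(ts)
        # Drop seconds so every event lands in the minute it occurred in.
        counts[moment.replace(second=0, microsecond=0)] += 1
    return counts


def find_bursts(timestamps, threshold):
    """Return (minute, count) pairs at or above threshold, oldest first."""
    counts = events_per_minute(timestamps)
    return sorted((minute, n) for minute, n in counts.items() if n >= threshold)
```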


Transcription Logs

- Transcription logs are more of an over-the-shoulder-style log, as you will see everything that was input to or output from the PowerShell console during the session. These can be extremely useful for determining whether the commands/script ran successfully, since error output is captured. While these logs are very useful to have, their format presents additional challenges with parsing when ingesting them into a SIEM.
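To give an idea of what parsing a transcript involves, here is a rough Python sketch. It pulls the header fields and the commands typed at the prompt out of a transcript's text. It assumes the header is framed by lines of asterisks and holds `Key: Value` pairs, and that commands follow a `PS ...>` prompt. Both patterns are assumptions based on typical transcripts. Multi-line input and custom prompts would need more work.

```python
import re

# Header lines are assumed to look like "Start time: 20190603101010".
HEADER_RE = re.compile(r"^([A-Za-z ]+?):\s*(.*)$")
# Command lines are assumed to start with a default-style "PS <path>>" prompt.
PROMPT_RE = re.compile(r"^PS\b[^>]*>\s?(.*)$")


def parse_transcript(text):
    """Return (header fields, commands) from a transcript's text."""
    lines = text.splitlines()
    header, commands = {}, []
    # Indexes of the "*****" separator lines; the first pair frames the header.
    separators = [i for i, line in enumerate(lines) if line.startswith("****")]
    if len(separators) >= 2:
        for line in lines[separators[0] + 1:separators[1]]:
            match = HEADER_RE.match(line)
            if match:
                header[match.group(1)] = match.group(2)
        body = lines[separators[1] + 1:]
    else:
        body = lines
    # Only prompt lines are kept; command output lines are skipped.
    for line in body:
        match = PROMPT_RE.match(line)
        if match:
            commands.append(match.group(1))
    return header, commands
```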

When it comes to central logging, I would recommend at the very least ingesting the 4104 (script block) logs, and if the storage/network bandwidth is available, it is worth grabbing the 4103 (module) logs as well. With these two events together, it should be fairly easy to create high fidelity alerts while also providing plenty of additional evidence/artifacts to string together the series of events that took place.

Transcription logs make a great addition to the event data, but as mentioned, their format presents additional challenges with parsing and adds to the concern around storage/network bandwidth. My recommendation here would be to at least store the Transcription logs on a central share, if possible. That way, if the analysis of the 4103/4104 events shows that a Transcription log is needed, the data is still readily available and easily retrievable.

PowerShell 5.0 auto logging

By default, PowerShell does not log everything, and to get the most out of its logging capabilities there are additional policies that need to be enabled. However, it is worth noting that starting with PowerShell 5.0, any script block that PowerShell flags as suspicious (based on a built-in list of suspicious content) will automatically be logged as a Script block/4104 event with a level of Warning, even if script block logging has not been enabled. If script block logging is explicitly disabled, these events will not be logged.
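When hunting through exported events, these auto-logged entries can be pulled out by combining the event ID with the event level (Warning is level 3 among the standard Windows event levels). A minimal Python sketch, assuming each exported event is a dictionary with Id, Level, TimeCreated and ScriptBlockText keys (a hypothetical export shape):

```python
# Standard Windows event levels: 1 Critical, 2 Error, 3 Warning, 4 Information, 5 Verbose.
WARNING = 3


def warning_script_blocks(events):
    """Return (TimeCreated, ScriptBlockText) for 4104 events logged at Warning level.

    Each event is assumed to be a dict with Id, Level, TimeCreated and
    ScriptBlockText keys (a hypothetical export shape).
    """
    return [
        (event.get("TimeCreated"), event.get("ScriptBlockText", ""))
        for event in events
        if event.get("Id") == 4104 and event.get("Level") == WARNING
    ]
```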

To demo the auto-logging behavior, let’s take the same script from the initial demo (which was not flagged), but this time run it with -ExecutionPolicy Bypass:

This is what the log looks like:

Of course not everything flagged at the warning level is going to be malicious, but it is a great place to start when hunting through logs for potentially malicious activity.

Enabling the additional PowerShell logging policies

The following policies will enable PowerShell to log Event ID 4103 (Module), 4104 (Script block), and Transcription logs.

These policies can be found under the following section in the Group Policy Management Editor console:

Computer Configuration > Policies > Administrative Templates > Windows Components > Windows PowerShell

The following GPO settings need to be enabled:

| Setting | State |
| --- | --- |
| Turn on Module Logging | Enabled |
| Turn on PowerShell Script Block Logging | Enabled |
| Turn on PowerShell Transcription | Enabled |

The Script Block Logging policy is pretty straightforward: just enable the policy. I would suggest leaving the box for invocation start/stop events (4105/4106) unchecked, since the invocation events can be very high in volume and provide little value:

For the Module logs, I generally recommend starting off broad. Module names are matched against wildcard patterns: Microsoft.PowerShell.* covers the built-in Microsoft.PowerShell modules, while * captures every module:

Ideally, the Transcription logs should be configured to go to a central share:

The link below is to a Microsoft blog that explains how to set up the permissions on a central share for this exact reason. Check out the section about the Over-the-shoulder transcription – outputdirectory:

PowerShell ♥ the Blue Team
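Behind the scenes, the three policies above are stored as registry values under HKEY_LOCAL_MACHINE\SOFTWARE\Policies\Microsoft\Windows\PowerShell. Knowing this is handy for a standalone lab machine that is not managed by GPO. The Python sketch below renders an equivalent .reg file. The value names are the ones these policies are commonly documented to write, but treat the output as an assumption to verify against a GPO-managed machine. The transcript share in the example (\\fs01\PSTranscripts$) is hypothetical.

```python
BASE_KEY = r"HKEY_LOCAL_MACHINE\SOFTWARE\Policies\Microsoft\Windows\PowerShell"


def reg_string(value):
    """Quote a string for a .reg file, escaping backslashes and double quotes."""
    return '"' + value.replace("\\", "\\\\").replace('"', '\\"') + '"'


def reg_dword(value):
    """Format an integer as a .reg DWORD value."""
    return f"dword:{value:08x}"


def build_logging_policy_reg(transcript_dir, module_patterns=("*",),
                             invocation_logging=False):
    """Render a .reg file enabling script block, module and transcription logging."""
    lines = ["Windows Registry Editor Version 5.00", ""]

    def add_key(subkey, values):
        lines.append(f"[{BASE_KEY}\\{subkey}]")
        for name, data in values:
            lines.append(f"{reg_string(name)}={data}")
        lines.append("")

    # Script block logging (4104); invocation start/stop events (4105/4106) off by default.
    add_key("ScriptBlockLogging", [
        ("EnableScriptBlockLogging", reg_dword(1)),
        ("EnableScriptBlockInvocationLogging", reg_dword(int(invocation_logging))),
    ])
    # Module logging (4103); each module name pattern is stored as name=data.
    add_key("ModuleLogging", [("EnableModuleLogging", reg_dword(1))])
    add_key("ModuleLogging\\ModuleNames",
            [(pattern, reg_string(pattern)) for pattern in module_patterns])
    # Transcription to the given (ideally central) output directory.
    add_key("Transcription", [
        ("EnableTranscripting", reg_dword(1)),
        ("OutputDirectory", reg_string(transcript_dir)),
    ])
    return "\r\n".join(lines)
```

For example, `build_logging_policy_reg(r"\\fs01\PSTranscripts$")` returns text that can be saved as a .reg file and imported on a test machine. For domain-joined machines, the GPO remains the cleaner way to manage these settings.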

Restricting access to the PowerShell logs

One setting that often gets overlooked is that the PowerShell logs in the Event Viewer are readable by interactive users by default. In the typical Active Directory domain, low-privileged (non-admin) user accounts log into workstations interactively. That makes this a less than ideal setting, as the logs can contain sensitive information like passwords that have been entered or hard-coded in clear text (bad practice!).

The Windows Security log has a considerably better default configuration, limiting access to Administrators or higher. That makes this setting a great place to start when building out the initial baseline settings for restricting access to the PowerShell logs.

The permissions for event logs are configured through security descriptors. If you have never worked with security descriptors, you can find a wealth of information to help understand them here:

Security descriptors, part 1: what they are, in simple words

Additionally, all of the information to further break down the individual portions of a security descriptor can be found here:

Security Descriptor Definition Language

First let’s take a look at the security descriptors for the PowerShell logs using the following command:

PS> Get-WinEvent -ListLog *PowerShell* | Format-Table -Property LogName, SecurityDescriptor

As you can see in the screenshot above, the security descriptors for all of the PowerShell logs are on the long side and seem to have a few extra permissions. This includes the interactive users (IU) group mentioned earlier.

Now let’s take a look at the security descriptor for the Windows Security log:

PS> Get-WinEvent -ListLog Security | Format-Table -Property LogName, SecurityDescriptor

The security descriptor for the Security log is noticeably shorter:

O:BAG:SYD:(A;;0xf0005;;;SY)(A;;0x5;;;BA)(A;;0x1;;;S-1-5-32-573)

I also want to mention a super useful cmdlet named ConvertFrom-SddlString that can be used to break down security descriptor strings:

PS> ConvertFrom-SddlString "O:BAG:SYD:(A;;0xf0005;;;SY)(A;;0x5;;;BA)(A;;0x1;;;S-1-5-32-573)"
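ConvertFrom-SddlString handles this on Windows. To show what it is actually decoding, here is a small Python sketch. It splits a descriptor into owner, group and ACEs, then translates each access mask using the event log rights (0x1 read, 0x2 write, 0x4 clear) plus the standard rights that make up 0xf0000. It only handles the simple O:/G:/D: layout with hexadecimal masks used by these logs, and the trustee names come from a small hand-picked table. It is an illustration, not a full SDDL parser.

```python
import re

# Event log specific access rights (read, write, clear).
EVENTLOG_RIGHTS = {0x1: "Read", 0x2: "Write", 0x4: "Clear"}
# Standard rights that make up the 0xf0000 portion of a mask.
STANDARD_RIGHTS = {0x10000: "Delete", 0x20000: "ReadControl",
                   0x40000: "WriteDac", 0x80000: "WriteOwner"}
# Small, hand-picked table of trustees seen in these descriptors.
TRUSTEES = {"SY": "Local System", "BA": "Builtin Administrators",
            "IU": "Interactive Users", "S-1-5-32-573": "Event Log Readers",
            "S-1-15-2-1": "ALL_APP_PACKAGES"}
ACE_TYPES = {"A": "Allow", "D": "Deny"}


def decode_mask(mask):
    """Turn an access mask into right names, leaving unknown bits as hex."""
    known = {**EVENTLOG_RIGHTS, **STANDARD_RIGHTS}
    names = []
    for bit in sorted(known):
        if mask & bit:
            names.append(known[bit])
            mask &= ~bit
    if mask:
        names.append(hex(mask))
    return names


def parse_sddl(sddl):
    """Split a simple O:/G:/D: SDDL string into owner, group and decoded ACEs."""
    match = re.fullmatch(r"O:(?P<owner>.*?)G:(?P<group>.*?)D:(?P<dacl>.*)", sddl)
    if not match:
        raise ValueError("expected an SDDL string of the form O:...G:...D:...")
    aces = []
    for ace in re.findall(r"\(([^)]*)\)", match["dacl"]):
        # ACE fields: type;flags;rights;object_guid;inherit_object_guid;sid
        ace_type, _flags, rights, _obj, _inherit, sid = ace.split(";")
        aces.append({"type": ACE_TYPES.get(ace_type, ace_type),
                     "trustee": TRUSTEES.get(sid, sid),
                     "rights": decode_mask(int(rights, 16))})
    return {"owner": TRUSTEES.get(match["owner"], match["owner"]),
            "group": TRUSTEES.get(match["group"], match["group"]),
            "aces": aces}
```

Run against the Security log descriptor, this shows Local System with full control of the log, Builtin Administrators with read and clear (0x5), and Event Log Readers with read only (0x1).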

There is one gotcha with using the Security log’s security descriptor. It works fine for restricting access to the Microsoft-Windows-PowerShell/Operational log, but the Windows PowerShell log requires one additional SID:

ALL_APP_PACKAGES – S-1-15-2-1

The updated security descriptor for the Windows PowerShell log would look like this:

O:BAG:SYD:(A;;0x2;;;S-1-15-2-1)(A;;0xf0005;;;SY)(A;;0x7;;;BA)(A;;0x1;;;S-1-5-32-573)
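A quick way to see exactly how this descriptor differs from the Security log’s is to compare the two DACLs ACE by ACE. A minimal Python sketch; the function names are hypothetical, and it assumes the DACL is the last section of the string (no SACL follows it):

```python
import re


def dacl_aces(sddl):
    """Return the set of ACE strings, e.g. 'A;;0x1;;;SY', in an SDDL DACL."""
    # Everything after "D:" is treated as the DACL (assumes no "S:" section follows).
    dacl = sddl.split("D:", 1)[1]
    return set(re.findall(r"\(([^)]*)\)", dacl))


def compare_dacls(baseline, candidate):
    """Report ACEs found only in candidate ("added") or only in baseline ("removed")."""
    base, cand = dacl_aces(baseline), dacl_aces(candidate)
    return {"added": sorted(cand - base), "removed": sorted(base - cand)}


def trustees(sddl):
    """Return the SIDs/aliases that appear as trustees in the DACL."""
    return {ace.split(";")[-1] for ace in dacl_aces(sddl)}


SECURITY_LOG = "O:BAG:SYD:(A;;0xf0005;;;SY)(A;;0x5;;;BA)(A;;0x1;;;S-1-5-32-573)"
WINDOWS_POWERSHELL_LOG = ("O:BAG:SYD:(A;;0x2;;;S-1-15-2-1)(A;;0xf0005;;;SY)"
                          "(A;;0x7;;;BA)(A;;0x1;;;S-1-5-32-573)")
```

Run against these two strings, the comparison reports two added ACEs: the ALL_APP_PACKAGES write entry (0x2) and a BA entry of 0x7. It reports the BA entry of 0x5 as removed. In other words, besides the extra SID, the Windows PowerShell descriptor also gives Builtin Administrators write (0x2) on top of read (0x1) and clear (0x4). Neither descriptor lists the interactive users (IU) group.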

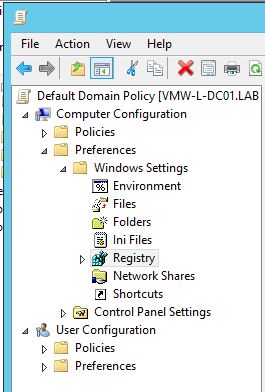

Updating security descriptors via GPO

To push out the updated security descriptor permissions, the following Group Policy Preferences setting can be used:

Computer Configuration > Preferences > Windows Settings > Registry

From there, right click in the center pane and go to New > Registry Item.

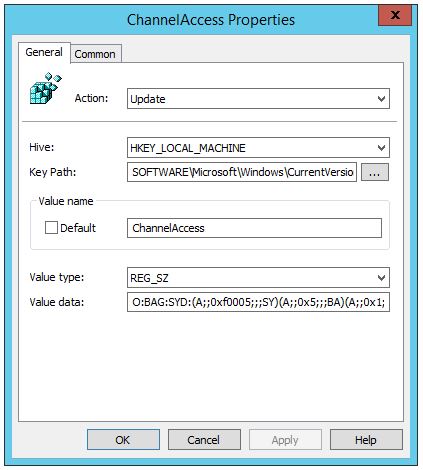

Two separate items need to be created, one for each log, with the following settings:

Microsoft-Windows-PowerShell/Operational log:

| Setting | Value |
| --- | --- |
| Action | Update |
| Hive | HKEY_LOCAL_MACHINE |
| Key Path | SOFTWARE\Microsoft\Windows\CurrentVersion\WINEVT\Channels\Microsoft-Windows-PowerShell/Operational |
| Value Name | ChannelAccess |
| Value Type | REG_SZ |
| Value Data | O:BAG:SYD:(A;;0xf0005;;;SY)(A;;0x5;;;BA)(A;;0x1;;;S-1-5-32-573) |

Windows PowerShell log:

| Setting | Value |
| --- | --- |
| Action | Update |
| Hive | HKEY_LOCAL_MACHINE |
| Key Path | SYSTEM\CurrentControlSet\Services\EventLog\Windows PowerShell |
| Value Name | CustomSD |
| Value Type | REG_SZ |
| Value Data | O:BAG:SYD:(A;;0x2;;;S-1-15-2-1)(A;;0xf0005;;;SY)(A;;0x7;;;BA)(A;;0x1;;;S-1-5-32-573) |
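If you want to try the same change on a single lab machine before rolling out the GPO, the two registry items can also be expressed as a .reg file. The Python sketch below renders one from the keys and values listed for the two logs. It is an illustration for testing, not a replacement for the Group Policy preference.

```python
def reg_string(value):
    """Quote a string for a .reg file, escaping backslashes and double quotes."""
    return '"' + value.replace("\\", "\\\\").replace('"', '\\"') + '"'


# (key, value name, SDDL) for the two PowerShell logs.
LOG_ACL_ITEMS = [
    (r"HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Windows\CurrentVersion\WINEVT\Channels"
     r"\Microsoft-Windows-PowerShell/Operational",
     "ChannelAccess",
     "O:BAG:SYD:(A;;0xf0005;;;SY)(A;;0x5;;;BA)(A;;0x1;;;S-1-5-32-573)"),
    (r"HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Services\EventLog\Windows PowerShell",
     "CustomSD",
     "O:BAG:SYD:(A;;0x2;;;S-1-15-2-1)(A;;0xf0005;;;SY)(A;;0x7;;;BA)(A;;0x1;;;S-1-5-32-573)"),
]


def build_log_acl_reg(items=LOG_ACL_ITEMS):
    """Render a .reg file that sets the security descriptor for each log."""
    lines = ["Windows Registry Editor Version 5.00", ""]
    for key, name, sddl in items:
        lines.append(f"[{key}]")
        # REG_SZ values are written as "name"="data" in .reg files.
        lines.append(f"{reg_string(name)}={reg_string(sddl)}")
        lines.append("")
    return "\r\n".join(lines)
```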

This is what it should look like with the items created:

Finally, it is time to verify that the logs are no longer readable by non-admin accounts:

It is important to keep in mind that these logs can contain sensitive information. When moving on to collecting the logs centrally, access to the data will need to be restricted there as well.

Downgrade attacks

PowerShell 2.0 is officially deprecated, and you should do everything you can to remove it from your environment. The following Microsoft blog post has a ton of useful information on this topic, along with ways to check whether it is enabled/installed:

Windows PowerShell 2.0 Deprecation

PowerShell 2.0 requires .NET 2.0/3.5, which, as of Windows 8 and Server 2012, is no longer installed by default but can be added as an additional package later on. Unfortunately, .NET 3.5 is a requirement for Microsoft Office. If you are running Office, you more than likely have .NET 3.5 installed, and more often than not PowerShell 2.0 will also be available.

Why is this a concern when it comes to PowerShell logging? PowerShell 2.0 doesn’t support most of the logging features, so by downgrading to v2, in what is referred to as a downgrade attack, logging can be evaded.

Let’s take a look at what this looks like from a logging perspective. The most common way to downgrade is to use one of the following commands. The first one drops into a full PowerShell 2.0 session:

PS> powershell.exe -Version 2 -ExecutionPolicy Bypass

The initial command is observed in the logs as a 4104 event, but the $PSVersionTable command that followed was missed entirely, as would be any additional commands. This still leaves some room to create an alertable condition based on the initial event.
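As a starting point for that alert, the Python sketch below flags command lines, such as the ScriptBlockText of exported 4104 events, that invoke powershell with -Version 2. It assumes PowerShell’s usual parameter-name abbreviation (-v, -ver and so on) and is only a heuristic. Obfuscated command lines can slip past it, so treat it as a complement to the Windows PowerShell log detection covered later in this section.

```python
import re

# "powershell" or "powershell.exe" somewhere on the line.
POWERSHELL_RE = re.compile(r"(?i)\bpowershell(?:\.exe)?\b")
# -Version or an abbreviation of it (-v, -ver, ...) followed by 2 or 2.0.
VERSION_2_RE = re.compile(
    r"(?i)(?:^|\s)-v(?:e(?:r(?:s(?:i(?:o(?:n)?)?)?)?)?)?\s+2(?:\.0)?(?=\s|$)"
)


def looks_like_downgrade(command_line):
    """Heuristically flag a command line that starts the PowerShell 2.0 engine."""
    return bool(POWERSHELL_RE.search(command_line)
                and VERSION_2_RE.search(command_line))
```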

The second example will run a single command or script block under the PowerShell 2.0 engine, returning to the current version when complete:

PS> powershell.exe -Version 2 -ExecutionPolicy Bypass -Command {script block/command}

Since the command was entered inline, the entire string was captured as a 4104 event. As you can see from the output though, it was indeed executed under the 2.0 engine.

This is one of the few situations where the Windows PowerShell log can be useful. Lee Holmes has an excellent post on leveraging this log for detecting downgrade attacks:

Detecting and Preventing PowerShell Downgrade Attacks

This is how a downgrade attack is logged under Event ID 400 in the Windows PowerShell log when using the commands mentioned above:

The main takeaway from this section is that while downgrade attacks can be detected, it still creates a visibility gap and you should do everything possible to remove the PowerShell 2.0 engine.
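To make the Event ID 400 approach concrete, here is a Python sketch along the lines of the detection in Lee Holmes’ post: pull EngineVersion out of the event message and flag anything older than 5.0. The key=value parsing is an assumption about how the exported message text is laid out.

```python
import re

# Message lines are assumed to look like "\tEngineVersion=2.0".
FIELD_RE = re.compile(r"^[ \t]*(\w+)=(.*)$", re.MULTILINE)


def parse_event_400(message):
    """Return the key=value fields from a Windows PowerShell event 400 message."""
    return {key: value.strip() for key, value in FIELD_RE.findall(message)}


def is_downgrade(message, minimum=(5, 0)):
    """True if the engine that started is older than `minimum` (major, minor)."""
    version = parse_event_400(message).get("EngineVersion", "")
    try:
        major_minor = tuple(int(part) for part in version.split(".")[:2])
    except ValueError:
        # Missing or unparseable version: do not flag it here.
        return False
    return major_minor < minimum
```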

Obfuscation

One of the greatest benefits of PowerShell 5.0 is that it introduces deep script block logging. This means it will break down most obfuscated PowerShell into more human-readable chunks of data as the code is processed by the PowerShell engine. The following blog has some really great information on this particular feature under the Deep script block logging section:

PowerShell ♥ the Blue Team

I also want to mention another post that has some additional information that is really useful in understanding what obfuscated PowerShell code can look like and how the deobfuscated code can be combined with AMSI for enhanced detections:

Windows 10 to offer application developers new malware defenses

This all sounds awesome, but it can be a little tough to find more examples of what this looks like in the actual logs and if there are any limitations or gotchas. After learning about this behavior and then reviewing logs of malicious activity, you may come across an event like the following and wonder if this even really works:

The thing to understand here is that PowerShell is going to break the code down, stripping away the layers of obfuscation as the code is processed through the pipeline, logging each iteration individually. While one event may be heavily obfuscated, it is the events that follow that you will want to pay attention to.

Let’s take a look at what this looks like by using the simple script I used in the beginning of this post for the initial logging examples and combining it with something like Invoke-Obfuscation from Daniel Bohannon. If you are interested in this particular topic, I highly suggest checking out Daniel’s blog and any of his talks that you can find online, he has done some pretty amazing research:

https://www.danielbohannon.com/

Taking the initial demo script:

Loading Invoke-Obfuscation into the PowerShell session:

PS> Import-Module -name .\Invoke-Obfuscation.psd1

PS> Invoke-Obfuscation

Now to obfuscate the script:

Invoke-Obfuscation> set scriptpath c:\users\administrator\desktop\demo.ps1

Invoke-Obfuscation> compress

Invoke-Obfuscation\Compress> 1

This is what it looks like as it is logged:

And then in one of the 4104 events that follows, we see the deobfuscated script block:

This behavior can be a little less obvious when reviewing logs in a SIEM, but it helps once you understand what is actually going on under the hood.

Estimating PowerShell log storage requirements

Now that we see the value of the PowerShell logs, know what the data looks like, and know which events to monitor, it is time to start thinking about collecting this data into a central location or SIEM. When adding a new log source like this, it is always a good idea to have a rough estimate of how much storage is going to be required.

There are two ways to go about this with the PowerShell logs:

- Option 1: Use the data that is already available in the local logs to build out some basic statistics and sizing information. This is a great way to get some initial numbers. Unfortunately, Windows doesn’t really provide a simple way to do this out of the box.

- Option 2: Start ingesting logs from a few machines to get some sample data to run statistics on and gather sizing information. This method will provide very accurate data, but also has quite a few moving parts which will take time and resources for the implementation.

Ideally, you would go with Option 1 and then follow up with Option 2. To make the first option more feasible, I am releasing a proof-of-concept tool with this post that uses PowerShell to help analyze the logs. You can find this tool, Get-PSLogSizeEstimate, on my GitHub:

Get-PSLogSizeEstimate

Here is the basic idea of how the estimate is calculated:

- The size of the log divided by the total number of events in the log = the average event size

- The retention period divided by the timespan between the oldest and newest events in the log = the number of log rotations per defined retention period

Building off the data from the two previous steps:

Number of events x average event size x estimated log rotations per specified retention period = estimated storage requirement
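To make the arithmetic concrete, here is the same calculation restated in Python. This is not the Get-PSLogSizeEstimate code, only a sketch of the formula above with hypothetical example numbers. Passing the total event count instead of the filtered count gives the estimate for all events.

```python
from datetime import datetime

SECONDS_PER_DAY = 86400


def estimate_storage(log_size_bytes, total_events, counted_events,
                     oldest, newest, retention_days):
    """Estimate bytes needed to keep `counted_events` worth of data for the retention period."""
    if total_events == 0:
        return 0.0
    # Step 1: average event size = log size / total events in the log.
    average_event_size = log_size_bytes / total_events
    # Step 2: log rotations = retention period / timespan covered by the log.
    timespan_days = (newest - oldest).total_seconds() / SECONDS_PER_DAY
    if timespan_days <= 0:
        raise ValueError("the log must cover a positive timespan")
    rotations = retention_days / timespan_days
    # Step 3: number of events x average event size x rotations.
    return counted_events * average_event_size * rotations


if __name__ == "__main__":
    # Hypothetical numbers: a 20 MiB log with 10,000 events spanning 10 days,
    # 2,000 of which are 4103/4104 events, kept for 30 days.
    estimate = estimate_storage(20 * 1024 * 1024, 10_000, 2_000,
                                datetime(2019, 6, 1), datetime(2019, 6, 11), 30)
    print(f"Estimated storage: {estimate / 1024 / 1024:.1f} MiB")
```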

Use the following command to load the script into the current PowerShell session:

PS> . .\Get-PSLogSizeEstimate.ps1

Get-PSLogSizeEstimate accepts the following command line parameters:

- -EventID / -e: Required, the event IDs to filter on, e.g. 4103, 4104.

- -Retention / -r: Required, the retention period in days, e.g. 30.

- -ComputerName / -c: Optional, a remote machine to gather the PowerShell logs from, e.g. Win-DC01.

- -OutputFile / -o: Optional, the name of the file to save the output to, e.g. test-output.txt. Default value: Get-PSLogSizeEstimate-output.txt.

Example usage:

PS> Get-PSLogSizeEstimate -EventID 4103,4104 -Retention 30 -ComputerName DC01 -OutputFile Get-PSLogSizeEstimate-DC01.txt -Verbose

And the shorthand version:

PS> Get-PSLogSizeEstimate -e 4103,4104 -r 30 -c DC01 -o Get-PSLogSizeEstimate-DC01.txt -Verbose

Here is what the output looks like:

As you can see from the output, filtering the data down to just 4103 and 4104 events saves about 0.5 MB in this particular scenario. This may not seem like much of a difference, but keep in mind that this is a lab machine that is not very busy. The sizing gap between all events and filtered events will likely grow significantly on production machines.

Let’s take a quick look at the output from a busier machine, like the one that I have been writing and running this script on:

As you can see from the output, the estimate drops from just over 23MB for 30 days of retention to under 3MB by targeting the events with the most useful data.

Wrap Up

I think that pretty much covers everything you need to know to get up and running with PowerShell logging. At this point you should have the basic idea of how to enable the logging, what logs/events to monitor, what that data looks like, and some of the gotchas around this type of logging.

If you are interested in getting these logs into a central SIEM like the Elastic stack, I have another series of posts that covers setting up ELK (Elasticsearch, Logstash, and Kibana) and then gathering these logs (and a few others) from remote hosts with Winlogbeat:

Installing ELK 7 (Elasticsearch, Logstash and Kibana) – Windows Server 2016 (Part I)

Gathering Windows, PowerShell and Sysmon Events with Winlogbeat – ELK 7 – Windows Server 2016 (Part II)